Want to improve your website’s conversion rates? A/B testing lets you systematically optimize design and content using evidence from real visitors, which can lead to higher engagement and, ultimately, more sales or other desired outcomes. This guide explains the A/B testing methodology, offers practical strategies for running effective tests, and shows how to analyze the results so that the changes you ship are the ones that actually improve performance.

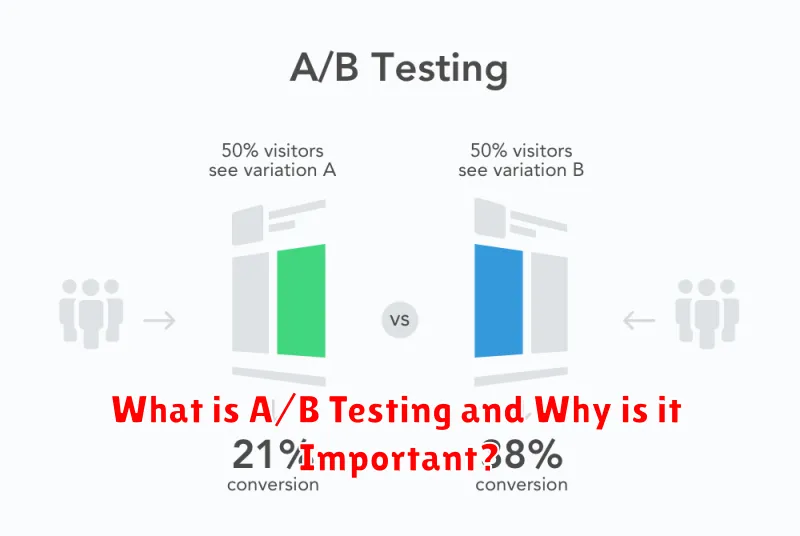

What is A/B Testing and Why is it Important?

A/B testing, also known as split testing, is a method of comparing two versions of a webpage or app against each other to determine which performs better. This involves showing Version A to one group of users and Version B to another, then analyzing the results to see which version achieves a higher conversion rate (e.g., more purchases, sign-ups, or clicks).
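As a rough illustration of how visitors might be split between the two versions, here is a minimal sketch in Python. It assumes each visitor has a stable identifier (such as a cookie value) and that a 50/50 split is wanted; the function name `assign_variant` and the experiment label are hypothetical, and commercial tools handle this step for you.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "homepage-headline") -> str:
    """Deterministically assign a visitor to version 'A' or 'B' (50/50 split)."""
    # Hash the experiment name together with the user id so the same visitor
    # always sees the same version, and separate experiments split independently.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # integer in the range 0..99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    for uid in ("visitor-1", "visitor-2", "visitor-3"):
        print(uid, assign_variant(uid))
```

Hashing rather than calling a random number generator on every page view keeps the experience consistent for returning visitors, which matters because a user who sees both versions muddies the comparison.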

Its importance stems from its ability to provide data-driven insights into user behavior. Instead of relying on guesswork or assumptions, A/B testing allows businesses to objectively measure the effectiveness of different design choices, copy variations, or calls-to-action. This data-driven approach helps optimize websites and applications for better performance, leading to increased conversions and ultimately, improved business outcomes. By making informed decisions based on real user data, businesses can significantly improve their ROI and achieve their marketing goals more efficiently.

In essence, A/B testing is crucial for minimizing risk, maximizing efficiency, and ensuring that online experiences are tailored to user preferences for optimal results. It’s a fundamental tool for any organization serious about data-informed decision-making and continuous improvement.

How to Identify Key Metrics for A/B Testing

Successful A/B testing hinges on selecting the right key metrics to track. These metrics should directly reflect your website’s primary goals. For example, if your goal is increased sales, then conversion rate (percentage of visitors completing a purchase) is a crucial metric. If your focus is lead generation, lead submission rate becomes paramount.

Beyond primary goals, consider secondary metrics that offer insights into why your primary metrics change. These could include bounce rate (percentage of visitors leaving after viewing only one page), average session duration, and click-through rates (CTR) on specific elements. Analyzing these secondary metrics helps you understand the user experience and pinpoint areas for improvement.
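To make these definitions concrete, the sketch below computes a primary metric and the secondary metrics mentioned above from a list of session records. The `Session` fields are an assumed, simplified data shape for illustration; a real analytics export will look different.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pages_viewed: int
    duration_seconds: float
    clicked_cta: bool
    converted: bool


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Compute per-session rates for one version of a page."""
    n = len(sessions)
    if n == 0:
        raise ValueError("need at least one session")
    return {
        # Primary metric: share of sessions that completed the goal.
        "conversion_rate": sum(s.converted for s in sessions) / n,
        # Secondary metrics that help explain why the primary metric moved.
        "bounce_rate": sum(s.pages_viewed == 1 for s in sessions) / n,
        "avg_session_duration": sum(s.duration_seconds for s in sessions) / n,
        "cta_click_through_rate": sum(s.clicked_cta for s in sessions) / n,
    }
```

Running `summarize` once per version gives you the side-by-side numbers that the rest of the analysis builds on.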

Avoid tracking too many metrics simultaneously. Focus on a few key performance indicators (KPIs) that are directly tied to your objectives. Too many metrics can obscure the results and make it difficult to draw meaningful conclusions. Prioritize metrics that are actionable; those that you can use to directly inform website improvements.

Before starting your A/B test, clearly define your success criteria based on your chosen metrics. This will ensure you can accurately measure the impact of your changes and determine whether the test resulted in a statistically significant improvement.

Best Tools for A/B Testing

Choosing the right A/B testing tool is crucial for successful website optimization. The best tool for you will depend on your specific needs and budget, but several stand out for their features and ease of use.

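Google Optimize was long the go-to free option for beginners and smaller businesses, largely thanks to its integration with Google Analytics. However, Google discontinued Optimize (along with Optimize 360) on September 30, 2023, so it is no longer available for new tests. Teams that want testing data alongside their Google Analytics reports now typically pair Google Analytics 4 with one of the third-party platforms below.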

For more advanced testing and sophisticated features, Optimizely is a strong contender. It offers comprehensive A/B testing capabilities, including multivariate testing and personalization, but comes with a higher price tag.

VWO (Visual Website Optimizer) provides a user-friendly interface and powerful analytics, making it suitable for both beginners and experienced marketers. Its feature set is comparable to Optimizely, offering a good balance between functionality and cost.

AB Tasty is another popular choice, known for its strong personalization features and advanced targeting options. It’s a good option for businesses needing granular control over their testing campaigns.

Finally, Convert Experiences offers a comprehensive suite of experimentation tools, including A/B testing, personalization, and multivariate testing. It’s known for its robust reporting and analytics dashboards.

Whichever tools you shortlist, weigh factors like budget, technical expertise, and the complexity of your testing needs when making your selection. Many platforms offer free trials, allowing you to explore their capabilities before committing.

How to Test Landing Pages for Higher Conversions

Effective landing page testing is crucial for maximizing conversions. A/B testing is your primary tool. This involves creating two versions of your landing page – a control (your current page) and a variation (with one element changed). You then direct traffic to both versions and analyze which performs better based on key metrics like conversion rate, bounce rate, and time on page.

Focus your tests on key elements. Experiment with different headlines, calls to action (CTAs), images, form fields, and overall page layout. Start with one change at a time to accurately isolate the impact of each modification. Use analytics tools to track performance and determine a statistically significant winner.

Hypothesis-driven testing is key. Before each test, formulate a clear, measurable hypothesis about which change will improve which metric. This keeps your tests focused and makes the outcome easy to judge. For example, “A headline emphasizing benefits will increase click-through rates by 15%.” After the test, analyze the results and iterate based on your findings, continuously refining your landing pages with what the data shows.

Remember that sample size is important for reliable results. Insufficient data can lead to inaccurate conclusions. Before launching, use a sample size calculator to determine the number of visitors each version needs in order to detect the lift you care about. Finally, consider multivariate testing for more complex experiments involving multiple variations of several elements simultaneously, keeping in mind that every added combination splits your traffic further and so requires more visitors.
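For readers curious about what a sample size calculator does under the hood, here is a minimal sketch using the standard normal-approximation formula for comparing two proportions. The defaults (5% significance level, 80% power) are common conventions rather than requirements, and the function name is hypothetical; dedicated calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    relative_lift: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Approximate visitors needed per version to detect a relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # rate you hope the variation reaches
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Example: 5% baseline conversion rate, hoping to detect a 15% relative lift.
    print(sample_size_per_variant(0.05, 0.15))
```

With a 5% baseline and a 15% relative lift, this approximation asks for on the order of 14,000 visitors per version, which shows why small expected lifts on low-traffic pages can take a long time to confirm.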

Optimizing Call-to-Action (CTA) Buttons

Call-to-action (CTA) buttons are crucial for driving conversions on your website. A/B testing allows you to optimize them significantly. Experiment with different button colors, finding one that contrasts effectively with your website’s design and stands out.

Button text is equally vital. Test various options, focusing on clear and concise language that compels action. Instead of generic calls like “Submit,” try more specific and benefit-oriented phrases such as “Get Your Free Quote” or “Download Now.”

The size and placement of your CTA buttons are also important factors. Test different sizes to find one that’s prominent without being overwhelming. Strategically place buttons where users are most likely to see and interact with them, often after they’ve engaged with relevant content.

Finally, consider testing different button shapes (e.g., rounded vs. square) and using strong verbs to create a sense of urgency and encourage immediate action. Analyzing the results of your A/B tests will reveal which CTA button variations are most effective in driving conversions.

The Impact of Page Load Speed on Conversion Rates

Page load speed is a critical factor influencing conversion rates. Studies consistently demonstrate a strong correlation between faster loading times and improved user experience, leading to higher conversions. Slow loading pages frustrate visitors, causing them to abandon the site before completing desired actions.

Even a slight improvement in load time can significantly impact your bottom line. A delay of just a few seconds can result in a noticeable decrease in conversions, leading to lost revenue and missed opportunities. Conversely, optimizing for speed can dramatically increase engagement and boost sales.

A/B testing provides a powerful method for measuring the impact of page load speed optimizations. By comparing conversion rates between a control group (with the original page speed) and a test group (with improved speed), you can quantify the effect of speed improvements on your overall conversion rates. This data-driven approach allows for informed decisions on how best to invest in website performance enhancements.

Therefore, prioritizing page speed optimization through techniques like image compression, code minification, and efficient server configuration is crucial for maximizing conversion rates and achieving business goals. A/B testing enables you to scientifically validate the impact of these optimizations.

How to Analyze and Interpret A/B Test Results

Analyzing A/B test results involves several key steps. First, confirm that the test reached the sample size you planned before launch. Insufficient data can lead to inaccurate conclusions, and stopping as soon as the numbers look good inflates the chance of a false positive. Ensure your test ran long enough to gather the planned data; most testing tools report this.

Next, examine the key metrics. Focus on your primary metric (e.g., conversion rate) to understand whether there’s a statistically significant difference between your variations. Look at both statistical significance (p-value) and effect size (e.g., lift percentage). The p-value is the probability of seeing a difference at least as large as the one observed if the two versions actually performed the same; a low p-value (typically below 0.05) is conventionally treated as statistically significant. A larger effect size indicates a more substantial impact.
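As an illustration of how a p-value and lift can be computed from raw counts, the sketch below runs a two-sided two-proportion z-test. This is one common choice for conversion data, not necessarily what your testing tool uses, and the visitor and conversion counts in the example are made up.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, p_value, relative_lift) comparing version B against version A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the best estimate if both versions truly perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0 or rate_a == 0:
        raise ValueError("not enough conversions to compare the versions")
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    lift = (rate_b - rate_a) / rate_a
    return z, p_value, lift


if __name__ == "__main__":
    # Hypothetical counts: 500 of 10,000 visitors converted on A, 580 of 10,000 on B.
    z, p, lift = two_proportion_z_test(500, 10_000, 580, 10_000)
    print(f"z={z:.2f}  p={p:.4f}  lift={lift:.1%}")
```

In this made-up example the lift is 16% and the p-value falls below 0.05, so the difference would conventionally be called statistically significant.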

Consider confidence intervals. These provide a range within which the true effect likely lies. A wider interval signifies more uncertainty. Analyze secondary metrics to assess the overall impact of changes. For example, if conversion rate improved, but time on site decreased significantly, you might need further analysis.
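A confidence interval for the difference in conversion rates can be sketched as follows. This uses the simple normal-approximation (Wald) interval, which is reasonable for large samples; the counts are hypothetical and the function name is an assumption for illustration.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(
    conv_a: int, n_a: int, conv_b: int, n_b: int, confidence: float = 0.95
) -> tuple[float, float]:
    """Confidence interval for (rate of B minus rate of A), in absolute terms."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Unpooled standard error: each version keeps its own variance.
    se = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = rate_b - rate_a
    return diff - z * se, diff + z * se


if __name__ == "__main__":
    low, high = diff_confidence_interval(500, 10_000, 580, 10_000)
    print(f"B beats A by between {low:.2%} and {high:.2%} (absolute)")
```

If the interval includes zero, the data are still consistent with no difference between the versions; collecting more visitors narrows the interval.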

Finally, interpret your findings holistically. Don’t just focus on numbers; consider the business context. Even a statistically significant improvement might not be worthwhile if the increase is minimal compared to the cost or effort. Document your findings, including the methodology, results, and conclusions, for future reference.

Common Mistakes to Avoid in A/B Testing

Successfully conducting A/B tests requires careful planning and execution. One common mistake is insufficient sample size. A test with too few visitors is unlikely to detect a real difference, and any difference it does show may simply be noise, leading to inaccurate conclusions. It’s crucial to use a sample size calculator to determine the appropriate number of participants before the test begins.

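Another frequent error is changing too many variables at once. If a single variation alters the headline, the image, and the button together, you cannot tell which change drove the result. Proper multivariate testing can separate these effects, but it is more complex, harder to interpret, and needs considerably more traffic. Unless you have that traffic, test one element at a time (e.g., headline, button color) to isolate the impact of each change.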

Ignoring statistical significance is another pitfall. Don’t rely solely on raw conversion rates. Use statistical tests (like chi-squared or t-tests) to determine whether the observed differences are unlikely to be due to random chance. A statistically significant result makes your conclusion more reliable, but it does not guarantee it: at a 0.05 threshold, roughly one in twenty tests of a change with no real effect will still appear significant.
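For a sense of what the chi-squared test does with conversion data, here is a minimal sketch of Pearson’s chi-squared test on a 2×2 table (converted vs. not converted, for each version), without a continuity correction. It relies only on the standard library; the counts are hypothetical, and statistical packages offer more complete implementations.

```python
import math


def chi_squared_2x2(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (chi2, p_value) for a 2x2 table of conversions by version."""
    observed = [
        [conv_a, n_a - conv_a],  # version A: converted, not converted
        [conv_b, n_b - conv_b],  # version B: converted, not converted
    ]
    row_totals = [n_a, n_b]
    col_totals = [conv_a + conv_b, (n_a - conv_a) + (n_b - conv_b)]
    total = n_a + n_b
    if 0 in col_totals or 0 in row_totals:
        raise ValueError("every row and column needs at least one observation")
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            # Expected count if conversion were independent of the version shown.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # Survival function of the chi-squared distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


if __name__ == "__main__":
    chi2, p = chi_squared_2x2(500, 10_000, 580, 10_000)
    print(f"chi2={chi2:.2f}  p={p:.4f}")
```

For a two-version test, this statistic equals the square of the two-proportion z statistic, so the two approaches give the same two-sided p-value.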

Running tests for too short a period is detrimental. Website traffic fluctuates, and short tests may not capture the full impact of the changes. Allow sufficient time for each variation to collect enough data, considering daily and weekly traffic patterns.
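One way to turn a required sample size into a planned run length is sketched below. It assumes traffic is split evenly across versions and rounds up to whole weeks so that every day of the week is represented equally; the function name and the traffic figures are hypothetical.

```python
import math


def estimate_duration_days(
    required_per_variant: int,
    daily_visitors: float,
    variants: int = 2,
    traffic_share: float = 1.0,
) -> int:
    """Estimate how many days a test should run, rounded up to full weeks."""
    # traffic_share is the fraction of daily visitors included in the test.
    eligible_per_day = daily_visitors * traffic_share
    if eligible_per_day <= 0:
        raise ValueError("the test needs some daily traffic")
    raw_days = math.ceil(required_per_variant * variants / eligible_per_day)
    # Round up to whole weeks to cover weekday and weekend behavior evenly.
    return math.ceil(raw_days / 7) * 7


if __name__ == "__main__":
    # 14,190 visitors per version, 2,000 visitors per day in total.
    print(estimate_duration_days(14_190, 2_000))  # prints 21
```

Here the raw requirement is 15 days, which rounds up to three full weeks.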

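Finally, failing to properly segment your audience can lead to skewed results. An overall average can hide the fact that a change helps one group (e.g., mobile visitors) while hurting another. Where it makes sense, target tests to specific user groups based on demographics or behavior, and decide on those segments before the test starts: slicing the data many ways afterward makes it likely that some segment will look like a winner purely by chance. Avoid assuming that a single change works equally well for all users.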
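A per-segment breakdown can be sketched in a few lines. The record format here, a tuple of segment name, version shown, and whether the visitor converted, is an assumption for illustration, as are the segment labels in the example.

```python
from collections import defaultdict


def rates_by_segment(records):
    """Conversion rate for each (segment, variant) pair.

    `records` is an iterable of (segment, variant, converted) tuples.
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [conversions, visitors]
    for segment, variant, converted in records:
        counts[(segment, variant)][0] += int(converted)
        counts[(segment, variant)][1] += 1
    return {key: conv / n for key, (conv, n) in counts.items()}


if __name__ == "__main__":
    sample = [
        ("mobile", "A", False), ("mobile", "A", True),
        ("mobile", "B", True), ("mobile", "B", True),
        ("desktop", "A", True), ("desktop", "B", False),
    ]
    for key, rate in sorted(rates_by_segment(sample).items()):
        print(key, f"{rate:.0%}")
```

Each segment has fewer visitors than the test as a whole, so apply the same sample size and significance checks within a segment before acting on its result.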